PANNs: Large-scale Pretrained Audio Neural Networks for Audio Pattern Recognition

Contributed by Qiuqiang Kong and based on the IEEEXplore® article "PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition" published in the IEEE/ACM Transactions on Audio, Speech, and Language Processing, October 2020 and the SPS Webinar of the same title, available on the SPS Resource Center.

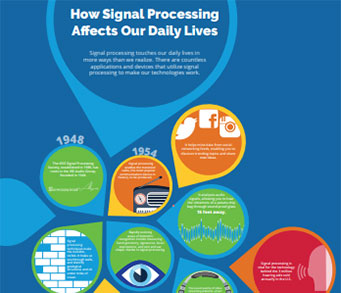

We are surrounded by sounds containing rich information about where we are and what events are happening around us. Audio pattern recognition is the task of recognizing and detecting sound events and scenes in our daily life. It is complementary to speech processing [1] and includes several subtasks, such as audio tagging, acoustic scene classification, music classification, speech emotion classification, and sound event detection. Audio pattern recognition is an important research topic in machine learning and has attracted increasing research interest in recent years.

Early audio pattern recognition work relied on private datasets; for example, hidden Markov models (HMMs) were applied to classify three types of sounds: a wooden door opening and shutting, dropped metal, and poured water [2]. More recently, the Detection and Classification of Acoustic Scenes and Events (DCASE) challenge series [3] has released publicly available datasets for acoustic scene classification and sound event detection. However, how well an audio pattern recognition system can perform when trained on a dataset 100 times larger than the DCASE datasets was still an open question. A milestone for audio pattern recognition was the release of AudioSet [4], a dataset containing over 5000 hours of audio recordings labelled with 527 sound classes. We proposed pretrained audio neural networks (PANNs) [5], trained on AudioSet, as backbone architectures for a variety of audio pattern recognition tasks. PANNs helped close the gap between pretrained models for computer audition and those already available for computer vision and natural language processing.

PANNs comprise a family of convolutional neural networks (CNNs) trained on the 5800-hour AudioSet dataset. Training PANNs poses several challenges. First, all audio recordings are weakly labelled: each audio clip is labelled only with the presence or absence of sound events, without their occurrence times, and some events, such as gunshots, last only a few hundred milliseconds within a clip. PANNs therefore use an end-to-end training strategy that takes the entire 10-second audio clip as input and directly predicts the presence probability of each tag under weak-label supervision, with a global pooling operation that automatically detects the regions of interest (ROIs) most likely to contain sound events. Second, the sound classes in AudioSet are highly imbalanced: classes such as speech and music appear in over 40% of audio clips, while classes such as toothbrush appear in less than 0.01% of the dataset. PANNs use a balanced training strategy in which clips from all sound classes are sampled uniformly to form each training mini-batch. Third, sound classes with few training samples tend to overfit. To counter this, PANNs apply spectrogram augmentation, which randomly erases time and frequency stripes, and mixup, which randomly mixes the waveforms and targets of two audio clips to simulate more training data.
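The training ideas in the paragraph above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the PANNs code: `pool_clip`, `balanced_batch`, `mixup`, and `spec_augment` are hypothetical helper names, frame-level outputs and spectrograms are plain nested lists (frames x classes, frames x bins), and pooling is applied to frame-level probabilities for readability, whereas a real network may pool intermediate feature maps instead. The mixup weight is drawn here from a Beta distribution with `random.Random.betavariate`, a common choice that is assumed rather than taken from the source.

```python
import random
from typing import Dict, List, Sequence, Tuple


def pool_clip(frame_probs: Sequence[Sequence[float]], mode: str = "max") -> List[float]:
    """Collapse frame-level probabilities (frames x classes) into one clip-level score per class."""
    columns = list(zip(*frame_probs))  # one tuple of per-frame scores for each class
    if mode == "max":
        return [max(col) for col in columns]
    if mode == "avg":
        return [sum(col) / len(col) for col in columns]
    raise ValueError(f"unknown pooling mode: {mode}")


def balanced_batch(clips_by_class: Dict[str, List[str]], batch_size: int,
                   rng: random.Random) -> List[str]:
    """For each batch slot, pick a class uniformly at random, then a clip of that class."""
    classes = sorted(clips_by_class)
    return [rng.choice(clips_by_class[rng.choice(classes)]) for _ in range(batch_size)]


def mixup(wave_a: Sequence[float], wave_b: Sequence[float],
          target_a: Sequence[float], target_b: Sequence[float],
          lam: float) -> Tuple[List[float], List[float]]:
    """Mix two equal-length waveforms and their multi-hot targets with the same weight."""
    wave = [lam * a + (1.0 - lam) * b for a, b in zip(wave_a, wave_b)]
    target = [lam * a + (1.0 - lam) * b for a, b in zip(target_a, target_b)]
    return wave, target


def spec_augment(spec: Sequence[Sequence[float]], max_t: int, max_f: int,
                 rng: random.Random) -> List[List[float]]:
    """Zero one random time stripe and one random frequency stripe of a (frames x bins) spectrogram."""
    out = [list(row) for row in spec]  # copy so the input is left untouched
    n_frames, n_bins = len(out), len(out[0])
    t_width = rng.randint(0, min(max_t, n_frames))
    t_start = rng.randint(0, n_frames - t_width)
    f_width = rng.randint(0, min(max_f, n_bins))
    f_start = rng.randint(0, n_bins - f_width)
    for t in range(t_start, t_start + t_width):
        out[t] = [0.0] * n_bins
    for row in out:
        for f in range(f_start, f_start + f_width):
            row[f] = 0.0
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical "gunshot" present in 2 of 100 frames (a single class).
    frames = [[0.95] if 40 <= t < 42 else [0.01] for t in range(100)]
    print("max:", pool_clip(frames, "max"), "avg:", pool_clip(frames, "avg"))
    # A rare class is drawn about as often as a frequent one.
    clips = {"speech": [f"speech_{i}" for i in range(1000)], "toothbrush": ["toothbrush_0"]}
    print(balanced_batch(clips, 8, rng))
    lam = rng.betavariate(1.0, 1.0)
    print(mixup([1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0], lam))
```

The pooling example shows why the choice matters under weak labels: a two-frame event keeps its score under max pooling, while average pooling spreads it over the whole clip and shrinks it to about 0.03.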

PANNs explored several CNN architectures, including time-domain CNNs, frequency-domain CNNs, and a system combining both domains called Wavegram-Logmel-CNN. PANNs investigated CNNs with 6 to 54 layers and showed that performance increases with more layers when the networks are trained on large-scale data. The 14-layer CNN (CNN14) achieves a good balance between tagging performance and computational complexity, reaching a mean average precision (mAP) of 0.431 and outperforming the AudioSet baseline system (0.314). To combine the advantages of the time-domain and frequency-domain systems, the Wavegram-Logmel-CNN uses both a Wavegram feature learned from raw waveforms and a logarithmic mel spectrogram feature, which further improves the tagging mAP to 0.439. PANNs trained on the full 5800 hours of data significantly outperform those trained on 1% of the data (58 hours), with an mAP of 0.431 compared to 0.278, indicating that large-scale data is beneficial for training audio pattern recognition systems. PANNs also include lightweight MobileNet-based systems that require only 6.7% of the FLOPs and 5.0% of the parameters of CNN14 while still achieving an mAP of 0.383. These lightweight PANNs are designed for deployment on portable devices with limited computational resources.
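The mAP figures quoted above are means of per-class average precision (AP). A minimal sketch of that metric follows, assuming clip-level scores and binary labels stored as clips x classes lists. The function names are illustrative; classes with no positive clips are skipped, and tied scores keep their input order, so results may differ slightly from library implementations such as scikit-learn's `average_precision_score` when scores tie. The exact AudioSet evaluation protocol is not reproduced here.

```python
from typing import List, Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Non-interpolated AP for one class: mean precision at the rank of each positive clip."""
    # Highest score first; Python's sort is stable, so ties keep their input order.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precision_sum += hits / rank  # precision of the top-`rank` list
    return precision_sum / hits if hits else 0.0


def mean_average_precision(score_matrix: Sequence[Sequence[float]],
                           label_matrix: Sequence[Sequence[int]]) -> float:
    """Average the per-class AP over classes that have at least one positive clip."""
    aps: List[float] = []
    for k in range(len(score_matrix[0])):
        scores = [row[k] for row in score_matrix]
        labels = [row[k] for row in label_matrix]
        if any(labels):
            aps.append(average_precision(scores, labels))
    if not aps:
        raise ValueError("no class has a positive example")
    return sum(aps) / len(aps)


if __name__ == "__main__":
    scores = [[0.9, 0.2], [0.8, 0.7], [0.3, 0.6], [0.1, 0.4]]  # 4 clips x 2 classes
    labels = [[1, 0], [0, 1], [1, 0], [0, 0]]
    print(mean_average_precision(scores, labels))
```

In the toy example the first class ranks its positives at positions 1 and 3 (AP = 5/6) and the second ranks its single positive first (AP = 1.0), so the printed mAP is about 0.917.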

PANNs have since been used as pretrained models for a wide range of audio pattern recognition tasks and, by 2021, had achieved state-of-the-art results on several of them, including music genre classification on the GTZAN dataset, audio tagging on the Making Sense of Sounds (MSOS) dataset, and speech emotion recognition on the RAVDESS dataset. Users can apply PANNs as feature extractors or fine-tune them on their own data. PANNs have been adopted as official baseline systems for subtasks of the DCASE 2020-2022 challenges and the Holistic Evaluation of Audio Representations (HEAR) challenge 2021, and have been used in industry to build the television programme classification system at the BBC [6]. Overall, PANNs are designed for recognizing audio patterns in daily life and can serve as backbone architectures for a wide range of audio-related tasks.
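Using a pretrained network as a feature extractor means running the frozen network once to obtain an embedding per clip and training only a small classifier on top; fine-tuning, by contrast, also updates the pretrained weights. The sketch below illustrates the feature-extractor route with a nearest-centroid classifier, chosen for brevity (a logistic-regression or small MLP head is more typical). `CentroidProbe` is a hypothetical name, and the embeddings are toy three-dimensional vectors standing in for the output of a pretrained model; no real PANNs API is called.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def mean_vector(vectors: List[Vector]) -> List[float]:
    """Element-wise mean of equal-length vectors."""
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(len(vectors[0]))]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class CentroidProbe:
    """Classifier trained on frozen embeddings; the backbone that produced them is never updated."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, embeddings: List[Vector], labels: List[str]) -> "CentroidProbe":
        grouped: Dict[str, List[Vector]] = {}
        for emb, label in zip(embeddings, labels):
            grouped.setdefault(label, []).append(emb)
        # One mean embedding per class.
        self.centroids = {label: mean_vector(embs) for label, embs in grouped.items()}
        return self

    def predict(self, embedding: Vector) -> str:
        # Label of the most similar class centroid.
        return max(self.centroids, key=lambda label: cosine(embedding, self.centroids[label]))


if __name__ == "__main__":
    # Hypothetical embeddings; in practice these would come from a frozen pretrained model.
    train = [[1.0, 0.1, 0.0], [0.9, 0.2, 0.1], [0.0, 1.0, 0.9], [0.1, 0.8, 1.0]]
    labels = ["music", "music", "speech", "speech"]
    probe = CentroidProbe().fit(train, labels)
    print(probe.predict([0.95, 0.1, 0.05]))
```

Because only the small head is trained, this route is cheap and works with little labelled data; fine-tuning usually pays off when the target data is plentiful or differs strongly from AudioSet.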

References:

[1] H. Meng, “Advancing Technological Equity in Speech and Language Processing: Aspects, Challenges, Successes, and Future Actions,” IEEE SPS Blog, 2022.

[2] J. P. Woodard, “Modeling and classification of natural sounds by product code hidden Markov models,” IEEE Transactions on Signal Processing, vol. 40, no. 7, pp. 1833-1835, July 1992, doi: https://dx.doi.org/10.1109/78.143457.

[3] A. Mesaros, T. Heittola, and T. Virtanen, “TUT database for acoustic scene classification and sound event detection,” in Proc. 2016 24th European Signal Processing Conference (EUSIPCO), 2016, pp. 1128-1132, doi: https://dx.doi.org/10.1109/EUSIPCO.2016.7760424.

[4] J. F. Gemmeke et al., “Audio Set: An ontology and human-labeled dataset for audio events,” in Proc. 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017, pp. 776-780, doi: https://dx.doi.org/10.1109/ICASSP.2017.7952261.

[5] Q. Kong, Y. Cao, T. Iqbal, Y. Wang, W. Wang, and M. D. Plumbley, “PANNs: Large-Scale Pretrained Audio Neural Networks for Audio Pattern Recognition,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 2880-2894, 2020, doi: https://dx.doi.org/10.1109/TASLP.2020.3030497.

[6] L. Pham, C. Baume, Q. Kong, T. Hussain, W. Wang, and M. Plumbley, “An Audio-Based Deep Learning Framework For BBC Television Programme Classification,” in Proc. 2021 29th European Signal Processing Conference (EUSIPCO), 2021, pp. 56-60, doi: https://dx.doi.org/10.23919/EUSIPCO54536.2021.9616310.